In the first part of our overview on embeddings , we explored their fundamental nature and business value. Now, in this second and final installment, we examine the key challenges organizations face when implementing embedding technologies in real-world environments.

The Challenges: A Reality Check

While embeddings are transforming digital experiences, the path forward isn't without obstacles. As organizations rush to implement these powerful systems, several critical challenges are emerging that leaders need to understand and plan for.

The Performance Bottleneck

The very sophistication that makes embeddings so powerful also creates their biggest limitation: speed. Computing high-quality embeddings requires significant computational resources, creating latency that puts certain applications out of reach - at least for now. The Milvus benchmark revealed that embedding API latency, heavily influenced by global network effects and geographic location, is the critical bottleneck in real-world AI retrieval systems rather than model size or accuracy alone.

Real-time applications face the steepest hurdles. Speech-to-speech translation systems, where even a few hundred milliseconds of delay disrupts natural conversation flow, struggle to incorporate the semantic understanding that embeddings provide. Live content moderation for social gaming platforms - where toxic behavior needs to be caught and addressed within seconds - often must fall back to simpler, faster (but less accurate) keyword-based approaches.

Even in less time-sensitive applications, performance varies dramatically based on the complexity of the embedding model, the size of the dataset being searched, and the computational resources available. What works seamlessly for a startup with thousands of products may buckle under the weight of an enterprise catalog with millions of items.

The Cold Start Problem

Embedding systems also face the cold-start problem: they need sufficient data to understand your specific domain, terminology, and user behavior patterns. A brand-new platform won't immediately deliver the uncanny accuracy of mature systems with years of interaction data.

The Sustainability Question

The environmental reality of AI's computational demands is impossible to ignore. Embedding systems, like their large language model cousins, are resource-intensive consumers of electricity. Training embedding models requires GPU clusters running at full capacity for days or weeks. More concerning for ongoing operations, every search query, every recommendation request, every discovery interaction requires GPU computational power that translates directly to energy consumption.

For organizations committed to sustainability goals, this creates a genuine tension: the AI capabilities that drive better user experiences and business results come with a carbon footprint that's hard to justify in an era of climate consciousness. The challenge is particularly acute for consumer-facing applications with millions of daily interactions.

The Infrastructure Challenge: Why Vector Databases Matter

Understanding embeddings is only half the story. The real magic happens when you can actually use them at scale - and that requires an entirely different kind of database architecture.

Here's the fundamental problem: embeddings are useless sitting in isolation. To power search, recommendations, or discovery, you need to compare one embedding against potentially millions of others, finding the closest matches in that high-dimensional mathematical space we discussed earlier.

Traditional databases - whether SQL or NoSQL - are built for exact matching and simple comparisons. They excel at questions like "find all customers where age > 30" or "retrieve the product with ID 404." But ask them to "find the 10 vectors most similar to this query vector in 768-dimensional space" and they collapse. The mathematical operations required (calculating distances between vectors with hundreds of dimensions) would take prohibitively long, making real-time applications impossible.

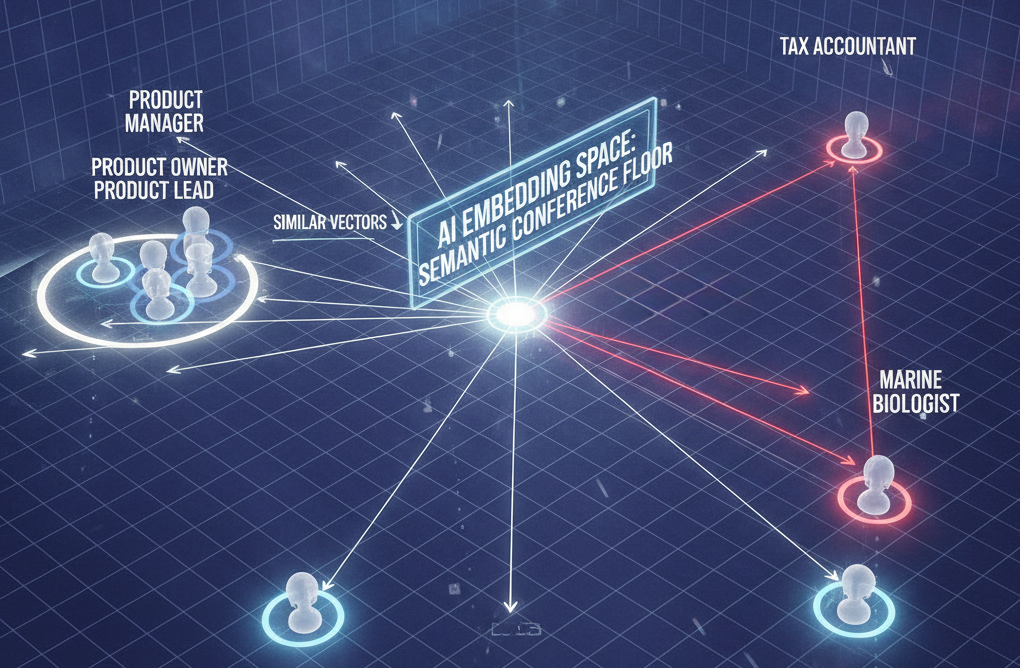

Think of it like this: using a traditional database to search embeddings is like trying to find your conference attendee with similar interests by asking people one by one if their coordinate matches yours, checking each dimension individually. It works, but you'd still be searching when the conference ends.

Enter Vector Databases

Vector databases are purpose-built for a single crucial task: finding similar vectors quickly, even when searching through millions or billions of them.

They accomplish this through specialized indexing strategies with names like HNSW (Hierarchical Navigable Small World), IVF (Inverted File Index), and LSH (Locality-Sensitive Hashing). You don't need to understand the mathematics behind these approaches - just know that they create structures that allow the database to navigate through high-dimensional space efficiently, finding approximate nearest neighbors in milliseconds rather than hours. Vector databases deserve their own dedicated article, and we'll be exploring them in depth in the future.

The Implementation Reality

Beyond technical limitations, organizations face practical challenges in deployment. Embedding systems require different infrastructure, new expertise, and often fundamental changes to existing search and recommendation architectures. The promise of better user experiences must be weighed against migration complexity, training requirements, and the risk of disrupting systems that, while imperfect, currently work.

The Silver Lining

These challenges are driving rapid innovation. More efficient embedding architectures are emerging, specialized hardware is reducing power consumption, and cloud providers are offering managed services that lower implementation barriers. The companies that navigate these challenges thoughtfully - balancing ambition with realistic constraints - will be best positioned to capitalize on embeddings' transformative potential.

What's Next

The embedding revolution is arriving - on the fast train. The question is whether you are getting on board or scrambling behind to catch up.

Look for further articles which address the challenges and cover some of these topics in a practical way.